I’ve been cleaning a lot of the cruft out of my domains lately. Subdomains, development domains, MySQL databases originally set up to stage all sorts of nefarious dealings… they’ve all been pulled up by the roots and tossed into heaping piles of gzipped tarballs.

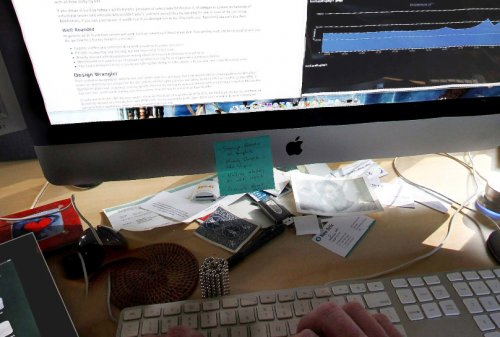

As part of this activity I’ve been cleaning out my Google Analytics account as well, as many of my analytic site profiles refer to domains long gone, testing procedures long concluded, directions I thought my web interests would go but didn’t. Having just made a Great and Terrible Mistake and irreversibly destroyed a trove of information courtesy of the slop that is the Google Analytics interface, I have penned a cautionary tale to make you aware of two of its most dangerous functions: pagination and deletion.

Google Analytics Pagination: Party like it’s 1995 (and your 14.4K U.S. Robotics Sportster just arrived)

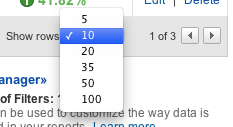

The pagination tool in Google Analytics defaults to displaying only 10 site profiles per page. Using the dropdown menu you can change this to 5, 10, 20, 35, 50 or 100.

An option to display only five profiles per page? What the hell? In what universe would that be useful? Are we seriously so pressed for bandwidth in 2010 that we cannot afford to peer at the world through more than a pinhole? Further, the cognitive load of needing to choose among six freaking options is ridiculous. It’s a modest burden to bear, but interfaces often manage to kill their users not through a single fatal flaw, but through an endless series of tiny papercuts such as this.

Seriously, Google Analytics. If you must have pagination, limit the options to 10, 50 and All. And for all that is holy, remember my choice for at least the duration of my session. Needing to reset the number of rows every time I go back to my profile list is maddening, and the fact that I can’t save this option as a personal setting is driving me insane.

Or would drive me insane, if I hadn’t screwed up in a much bigger way. Pagination in Google Analytics has an additional feature whose destructive tendencies are so finely tuned that they trump even the above critique. To expand on this, we’ll take a quick stroll through the flawed workflow for deleting a site profile.

Deletion: With great power comes insufficient gravity and illustrative consequence surrounding said power.

To delete a site profile, you click the “Delete” link in its corresponding row:

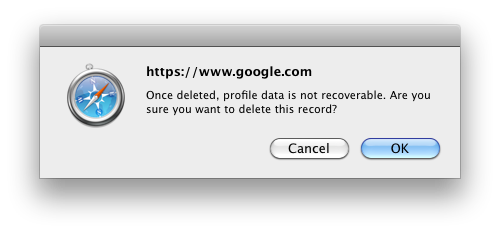

When you click “Delete”, a beautiful alert box pops up, a charming implementation of the “Hello World” of any beginner’s JavaScript tutorial.

In the alert box, the profile that will be deleted is not mentioned by name. It is up to you to remember, guess or assume which profile you originally clicked on. The most prominent information on this alert is the domain of the website that initiated the alert. Is that really the most important thing you need to know at this point, in order to make an informed decision? More important than the fact that the profile data cannot be recovered? More important than the name of the profile that’s actually being deleted?

Also note that “OK” is selected by default, so that pressing the return key will delete the profile. With an action as destructive as the irrecoverable deletion of years’ worth of information, it’s insanely poor form to select this choice by default.

Perhaps if creating a sensible “Delete” workflow in Google Analytics were as precious as maximizing clickthru rates on text ads, we’d see Google employing the same obsessive levels of testing that the color of its hyperlinks currently enjoys. As it stands, all I can say is user experience my ass.

One Plus One Equals Gone

The ambiguous delete tool in Google Analytics, combined with its poorly-executed pagination functionality, creates a perfect storm of destruction. No matter what page you are on, when you click “OK” to confirm the deletion of a profile, Google Analytics redirects you to the first page of your profile list.

(The alert box for confirming the delete action appears over your current page. After clicking “OK” from the alert box you are redirected to the first page, losing the context of your delete action.)

Like most humans, I have a finely-tuned spatial memory. I instinctively take note of where things are located in space, I can predict where they will go, and I can remember where they were. If I’m performing a repetitive task, say spooning food into my mouth, I don’t check my plate after every bite to make sure it hasn’t turned into a bowl of snakes. There is an expectation, born from my experience with physical reality, that the plate and food will remain fairly consistent between mouthfuls such that it doesn’t demand constant conscious consideration. In the words of Heidegger, the spoon, plate and food are ready-to-hand, an extension of myself, part of my world of absorbed coping.

In Google Analytics I had identified two profiles that were outdated, and I moved to delete both of them. Spatially, they were located right next to each other, one after the other. I deleted the first one, instinctively went to the location of the second one, and deleted it as well. The JavaScript alert, boldly declaring https://www.google.com/, was promptly ignored because it offered no useful information to confirm.

So long, dear friends.

Well, numerical representations of friends.

Unbeknownst to me, after deleting the first site profile I had been quietly redirected to the first page of my profiles list. And so, it came to pass that I deleted not the profile I intended to delete, but the profile documenting four years of activity here at Daneomatic. Clearly I’m not the first person to have accidentally (and irrecoverably) deleted a profile from Google Analytics.

Dear friends of Daneomatic, I ask that you enjoy your fresh start. Save your comments; I know nothing of you, of your browsers or activities or search terms.

Please, remake yourselves however you see fit. The gentle fellows at You Look Nice Today may offer some valuable suggestions as to how to best use this opportunity.

I, of course, would recommend the Mork from Ork suspenders.