Last summer I began thinking about something that I came to call “analog interactions”: those natural, in-the-world interactions we have with real, physical artifacts. My interest arose in response to a number of stimuli, one of which is the current trend towards smooth, glasslike capacitive touch screen devices. From iPhones to Droids to Nexus Ones to Mighty Mice to Joojoos to anticipated Apple tablets, there seems to be a strong interest in eliminating the actual “touch” from our interactions with computational devices.

Glass capacitive touch screens allow for incredible flexibility in the display of and interaction with information. This is clearly demonstrated by the iPhone and iPod Touch, where software alone can change the keyboard configuration from letters to numbers to numeric keypads to different languages entirely.

A physical keyboard that had to make the same adaptations would be quite a feat. The Optimus Maximus is an expensive step towards that kind of configurability in the display of keys, but its buttons do not move, change shape or otherwise physically alter themselves the way these touch screen keys can. Chris Harrison, a PhD student at CMU, and Scott Hudson, a professor there, have gone further, building a touch screen that uses small air chambers to provide physical (yet dynamically configurable) buttons.

From a convenience standpoint, capacitive touch screens make a lot of sense, because they combine input and output in one compact package. Their form factor also gives designers incredible latitude to use software to fine-tune interactions for particular applications. However, humans are creatures of the physical world, with an incredible capacity to sense, touch and interpret our surroundings. Our bodies have well-developed skills that help us function as beings in the world, and I feel that capacitive touch screens, with their cold and static glass surfaces, insult the nuanced capabilities of the human senses.

Looking back, in an effort to look forward.

Much of this coalesced in my mind during my summer in San Francisco, and specifically during my frequent trips to the Musee Mecanique. With its brilliant collection of turn-of-the-century penny arcade machines and automated musical instruments, the museum continually impressed me with the rich experiential qualities of these historic, pre-computational devices. From their lavish ornamentation to the deep stained woodgrain of their cabinets, from the way a sculpted metal handle feels in the hand to the smell of electricity in the air, the machines at the Musee Mecanique do an incredible job of engaging all the senses and offering a uniquely physical experience, despite their primitive inner workings.

Off the Desktop and Into the World

It’s clear from the trajectory of computing that our points of interaction with computer systems are going to become increasingly delocalized, mobile and dispersed throughout our environment. While I am not yet ready to predict the demise of desktop computing (whether on desktop or laptop machines), more and more of our interactions with computing will take place off the desktop and out in the world with us; I wrote about this on the Adaptive Path weblog while working there for the summer. Indeed, these interactions may supplement, rather than supplant, our usual eight-hour days in front of the glowing rectangle. As people in the modern world spend an ever larger share of their time interacting with computing, through any number of forms and methods, it becomes all the more important that we consider the nature of these interactions and deliberately model them in ways that leverage our natural human abilities.

Embodiment

One model that can offer guidance in the design of these in-the-world computing interactions is the notion of embodiment, which, as Paul Dourish puts it, describes the common way in which we encounter physical reality in the everyday world. We deal with objects in the world (we see, touch and hear them) in real time and in real space. Embodiment is the property of our engagement with the world that allows us to interpret and make meaning of it and of the objects we encounter in it. The physical world is the site and the setting for all human activity, and all theory, action and meaning arise out of our embodied engagement with the world.

From embodiment we can derive the idea of embodied interaction, which Dourish describes as the creation, manipulation and sharing of meaning through our engaged interaction with artifacts. Rather than situating meaning in the mind, as typical models of cognition do, embodied interaction posits that meaning arises out of our inescapable being-in-the-world. Our minds are necessarily situated in our bodies, and so our bodies, our own embodiment in the world, play a strong role in how we think about, interpret, understand and make meaning of the world. Theories of embodied interaction therefore respect the human body as the source of information about the world, and treat the user’s own embodiment as a resource when designing interactions.

Exploring Embodied Interaction and Physical Computing

And so, this semester I am pursuing an independent study into theories of embodied interaction, and practical applications of physical computing. For the sake of fun I am conducting this project under the guise of the Hans and Umbach Electro-Mechanical Computing Company, which is not actually a company, nor does it employ anyone by the name of Hans or Umbach.

In this line of inquiry I hope to untangle what it means when computing exists not just on a screen or on a desk, but embedded in the space around us. I aim to explore the naturalness of in-the-world interactions (the actions and behaviors that humans engage in every day without thinking) and how these can inform computer-augmented interactions that are more natural and intuitive. I am interested in exploring the boundary between the real/analog world (the physical world of time, space and objects in which we exist) and the virtual/digital world (the world of digital information that effectively exists outside of physical space), and how this boundary is constructed and navigated.

Is it a false boundary, because the supposed “virtual” world can only be revealed to us by manipulating pixels or other artifacts in the “real” world? Is it a boundary that can be described in terms of the aesthetics of the experience with analog/digital artifacts, such as a note written on paper versus pixels representing words on a screen? Is it determined by the means of production, such as a laser-printed letter versus a typewriter-written letter on handmade paper? Is a handwritten letter more “analog” than an identical-looking letter printed off a high-quality printer? These are all questions I hope to address.

Interfacing Between the Digital and Analog

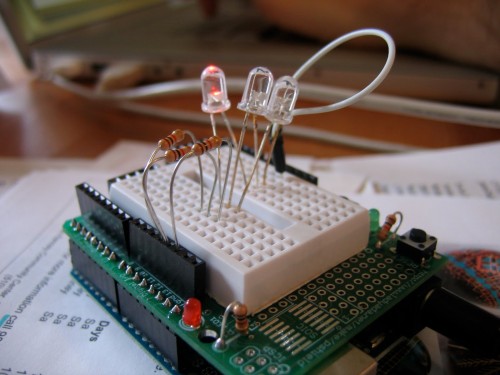

I aim to explore these questions by learning physical computing, and the Arduino platform in particular, as a mechanism for bridging the gap between digital information and analog artifacts. Electronics is quite unfamiliar to me, so I hope this can also be an opportunity to reflect on my own experience of learning something new. Given my background as a web developer and my knowledge of programming, I find electronics a particularly interesting interface, because it seems to be a physical manifestation of the programmatic logic that I have only engaged with virtually. I have coded content management systems for websites, but I have never coded something that takes up physical space and directly influences artifacts in the physical world.

Within the coding metaphor of electronics, too, there are two separate-but-related manifestations. The first is the raw “coding” of circuits, with resistors, transistors and the like, to achieve a certain result. The second is the program that I write in a text editor and upload to the Arduino board to make it work its magic; it is written in the Arduino language, which is based on C/C++ and whose development environment grew out of Processing. The Arduino platform is an incredibly useful tool for physical computing that I hope to learn more about in the coming semester, but it also puts a layer of mystery between the user and a real understanding of electronics. So, in concert with my experiments with Arduino, I will be working through the incredible Make: Electronics: Learning by Discovery book, which takes you from zero to hero when it comes to electronics. I do know a bit already, but I am still very much a zero at this point.

In Summary

Over the next few months I aim to study notions of embodiment, and embodied interaction in particular, in the context of learning and working with physical computing. As computing continues its delocalization and migration into our environment, it is important that existing interaction paradigms be re-examined for their appropriateness to new and different interactive contexts. The future of computing need not resemble the input and output devices that we currently associate with computers, even though the capacitive touch screen paradigm is a recognizable evolution of those devices. By deliberately designing for the embodied nature of human experience, we can create new interactive models that result in naturally rich, compelling and intuitive systems.

Welcome to the Hans and Umbach Electro-Mechanical Computing Company. It’s clearly going to be a busy, ambitious, somewhat dizzying semester.

4 Comments

This is great, Dane, and I can’t wait to see the work that you produce.

Beautifully written. I’m eager to see more from Hans and Umbach!

BTW, Dane, this weekend I was reminded of the now defunct Interaction Design Institute of Ivrea — which I became somewhat obsessed with around 2005.

Yesterday, I was reading up on their short-lived history, and it seems they had a hand in developing Arduino and Processing. My metaphorical Ivrea boner grew a little bigger.

Ahh shucks, thanks, you guys!

@sally Yeah! I was just reading about the history of Arduino, and it sounds like it popped out of those Ivrea folks right around 2005. While reading this academic paper by Saul Greenberg published in 2001, I couldn’t help but think that the Arduino peepz totally channeled its thinking and rationale in their design of the platform.