Our interest in embodied interaction started almost a year ago, when we spent the summer in San Francisco. Confronted by the overwhelming colors and textures of a real living-and-breathing (and, based on olfactory sensations, clearly excreting) city, we realized how malnourished our computer-mediated interactions were compared to the rich sensory experience of the real, physical world. Additionally, our time at the Musée Mécanique made us appreciate the aesthetic experience of real physical artifacts, of tactile materials like warm wood cabinets and cold metal handles. We liked heavy dials you had to twist, piano scrolls that spun, machines that would hum and shake as the gears and belts within worked their magic.

There is something different, something tangibly different, about real objects in real space around you. Sound that emanates from two metal pieces clanging together in real space is so much more satisfying than a recording played through a speaker. That they move, that they displace the air around them, the same air that you breathe, is just one of the ways we seem inexplicably tied to the physical realm.

Electronics as a tool for extending computation into the physical world.

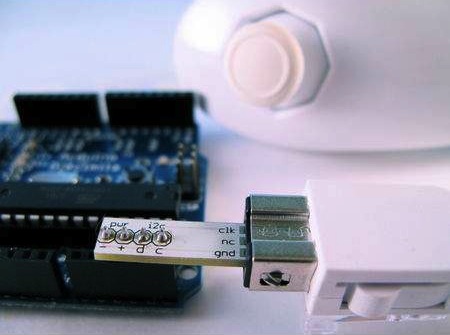

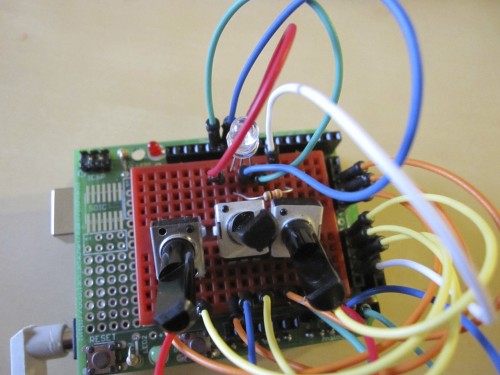

This was the goal as we began our inquiry: how to create physical interactions that exist in the real world and involve the manipulation of real artifacts, yet are invisibly backed by the strengths of modern computation and network technology. In our efforts to reintroduce interaction to the space around us we learned electronics, we experimented with Arduino, and we took our knowledge of programming and extended it into interactive electronic artifacts that existed in the world with us.

As we tinkered with electronics we quickly discovered that all of the subtlety and nuance, as well as the challenges, that go into designing “digital” interactions are present in physical computing, only amplified because we were now considering both an electronic and a physical layer. These are two layers that you take for granted when you build, say, a website or application. With Arduino they become your responsibility, subject to whatever limited grasp you may have of the subject area. We are disappointed by the limited progress we made in learning electronics, but we certainly have a renewed appreciation for people with the wide array of skills necessary to make not only functional, not only good, but great computationally backed physical interactions.

From a theoretical perspective, our original interests were to understand why these physical in-the-world interactions are so fulfilling and evocative, and why our virtual interactions feel so vapid in comparison. Our goal was to explain why these physical interactions should earn a privileged seat at the table, while virtual interactions should be sent to their room.

Tangible computing: digital information, physical interaction.

As we sifted through the layers of theory on embodiment, we realized we needed a better understanding of what defines a virtual interaction, and how it is different from a physical one. Traditionally, a virtual/digital interaction involves a screen composed of pixels, with a keyboard and pointing device used for controlling the interface. Tangible computing has worked to categorize interactions, characterizing certain ones as ‘tangible’ and others as ‘digital’. Indeed, with ambient computing Ishii and Ullmer have dedicated much of their work to studying how we can render ‘digital’ information, or ‘bits’, in ‘physical’ space. A number of authors have sought to define tangible computing in a manner that differentiates it from ‘regular’ computing. A few common tenets:

- Tangible computing unifies input and output surfaces. Instead of a keyboard that adds characters to a screen, or instead of a mouse that moves a cursor, tangible computing offers a new interactive model where the input mechanism and output display are one and the same artifact.

- Tangible computing affords direct manipulation. Instead of mapping the physical space my mouse occupies to the virtual space on the screen, the physical object I am working with can be grasped, turned and shaped in order to change its unified output characteristics.

Three Flavors: Data-Centered, Perceptual-Motor-Centered, Space-Centered

Hornecker outlines three primary views of tangible interaction. The work of Ishii and Ullmer concerns a data-centered viewpoint, where physical artifacts are computationally augmented with digital information. A second is a perceptual-motor-centered view of tangible interaction, which aims to leverage the affordances and rich sensory experience of physical objects. As championed by Djajadiningrat and Overbeeke among others, this view of tangible interaction emphasizes the expressive nature of human movement. Finally, Hornecker subscribes to a space-centered view of tangible interaction, which involves embedding virtual displays in real-world spaces.

I subscribe to a perceptual-motor-centered view of tangible interaction.

And I believe that the data-centered and space-centered views are absolute nonsense.

Let’s talk about digital and virtual.

Both the data-centered and space-centered views of tangible computing make reference to a ‘digital’ or ‘virtual’ world of information. These concepts are familiar enough, and we all have a gut feeling as to what they mean. Digital information is ones and zeroes. It lives in a computer or on a server somewhere. It is ephemeral, existing without really existing, and can be infinitely accessed, copied, reproduced and distributed without loss. It’s what got the music industry’s panties in a bundle.

The virtual world is the world in which this ‘digital’ information exists, and it lacks many of the familiar characteristics of the physical world. Things don’t actually ‘exist’ in the virtual world. A photo on Flickr is not a photograph in real life. You don’t need to be co-present with the photo in order to see it. Multiple people can look at the same photograph at the same time, without being aware of one another.

The metaphors we use to describe digital information and the virtual world emphasize its distributed, ephemeral nature. Deleted files disappear “into the ether” and we pull things down from “the cloud.” We think of it as an atmosphere that envelops us, existing independently of us and of the moments in which we perceive it through our devices.

Nevertheless, digital and virtual are only conceptual metaphors. They do not describe the objective qualities of our networked, computational systems, but rather our subjective framing of them. They are extremely effective metaphors, yes, as evidenced by their widespread use and prevalence in thought. But it is nonsense, utter nonsense, to claim that the virtual world exists and is in any way different from the physical world.

There is no digital information. There is no virtual world. There is only the physical world, where we encounter mediated instances of so-called ‘digital’ information.

You never see, nor interact with, a virtual world. There is no such thing as a virtual display. The idea of augmenting real, physical objects with digital information is meaningless, as is the idea of augmenting physical spaces with virtual displays.

If a tree falls in the forest and no one hears the sound, it does not make a sound.

My mind’s telling me virtual, but my body says physical.

All of your interactions with the ‘virtual’ world are necessarily mediated by whatever system or tool you are using to access it. This post, for instance, is not virtual. It is a collection of physical pixels emitting physical photons of light, which enter your eye in a pattern that your brain recognizes and interprets as text. This is the case whether you are reading this on your laptop, your phone or your iPad. If you want to comment on this post, you will perhaps press physical keys on your laptop to make recognizable characters appear on your physical screen, until they are in an amount and order you deem satisfactory.

Perhaps you will comment by pecking this out on an iPhone or iPad’s ‘virtual’ keyboard. Again, just because the keyboard is rendered on a screen, composed of pixels, does not mean that it is virtual. Just because there isn’t tactile feedback (technically there is tactile feedback, as your finger doesn’t pass through the device like a ghost, but it may be feedback that doesn’t meet your expectations for a keyboard) doesn’t mean it isn’t physical.

You never have direct, unmediated access to what is metaphorically described as the virtual world. All of your interactions with ‘virtual’ information are necessarily physical, necessarily tangible, and therefore embodied. Thus, anyone who claims that tangibility is a new agenda for computing is sadly mistaken. Tangibility has always been core to our ability to interact with and experience computational devices, from pixels to keyboards to touch screens.

All interactions are not created equal.

The arguments over classification, determining what ‘is’ a tangible interaction versus what ‘is’ a virtual interaction, are completely misguided. All interactions are tangible, all interactions are physical, all interactions are embodied, but all interactions are not necessarily created equal. As humans we have highly developed capacities to perceive, interpret, and make meaning out of our surroundings. In traditional desktop computing, as well as touch screen computing, devices tend to leverage only our most basic capacities for seeing and touching. These characteristics do not make an interaction virtual, nor do they make it intangible, but they do make it physically impoverished.

This was the big surprise the boys and I encountered over the course of this project. We initially set out to explain why physical interactions were more fulfilling than virtual ones, and how the traditional screen, keyboard and mouse ignored all but the most rudimentary human capabilities for interacting with the world. What we realized was that we couldn’t merely categorize some interactions as physical and others as virtual, because all interactions are necessarily situated in and mediated by the physical world. ‘Virtual’ is a convenient conceptual metaphor for describing a certain class of interactions, those that evoke only a limited set of our physical and perceptual capabilities, but the notion of a disembodied virtual world independent of the physical world is absolute nonsense. Moreover, I believe the appeal of the ‘physical’ and ‘virtual’ metaphors, and the territorial battles that have been fought under their banners, have distracted us from far more important agendas.

Traditional desktop interactions are unsatisfying not because they are ‘intangible’ or ‘virtual’, but because they offer an impoverished physical interaction that does little to leverage our unique abilities to perceive, interpret, and make meaning from our surroundings. Tangible computing differentiates itself not because it offers a ‘physical’ representation of ‘digital’ information, but because it uniquely focuses on the tactile qualities of interaction, and the rich sensory experiences that the world can afford.

Ultimately, all interactions are tangible. By acknowledging the metaphorical barrier between the ‘physical’ and ‘virtual’ worlds as a false one, and instead focusing on our ability to deliver richly evocative interactions through these different interactive paradigms, we are empowered to build more compelling interactions.