For the last three years I’ve been maintaining all my projects and websites, including Daneomatic and Brainside Out, as well as I’ve Been To Duluth and Terra and Rosco and Siskiwit, in a single Subversion repository. At any given time I find myself tinkering with a number of different projects, and honestly it keeps me awake at night if I’m not tracking that work in some form of version control. Given the number of projects I work on, and my tendency to abandon them and start new ones, I didn’t feel it necessary to maintain a separate repository for each individual project.

Subversion is, frankly, kind of a stupid versioning system, which actually works in favor of someone wanting to manage multiple projects in a single repository. Since it’s easy to check out individual folders, rather than the entire repository itself, all you need to do is create a unique folder for each individual project. Unlike in Git, the trunk, tags and branches are just folders in Subversion, so you can easily compartmentalize projects using a folder hierarchy.

This approach creates a terribly twisted and intertwined history of commits, with each project wrapped around the other. My goal, however, was not necessarily good version control, but any version control at all. Like living, keeping multiple projects in the same repo beats the alternative.

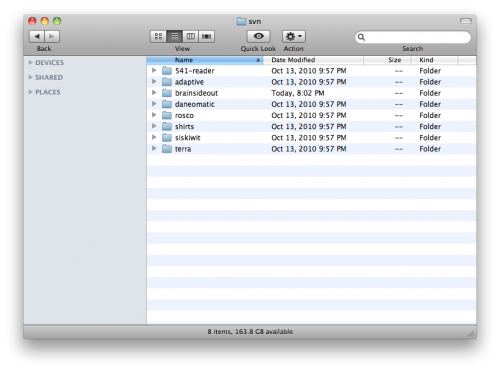

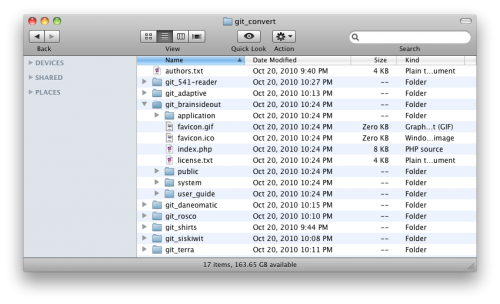

The folder hierarchy of my Subversion repository looks like this. Each project has its own folder:

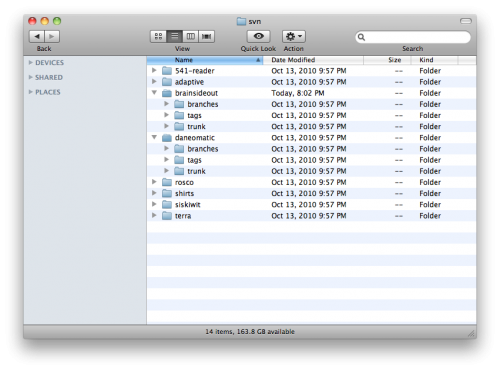

Within each project is the standard folder structure for branches, tags and trunk:

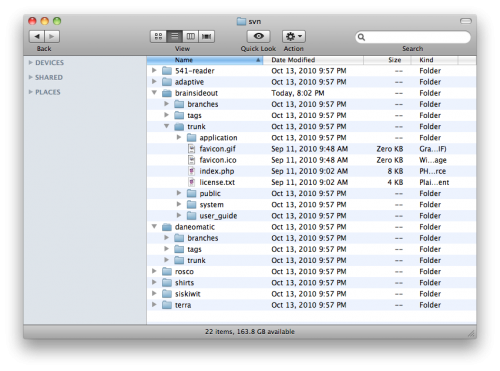

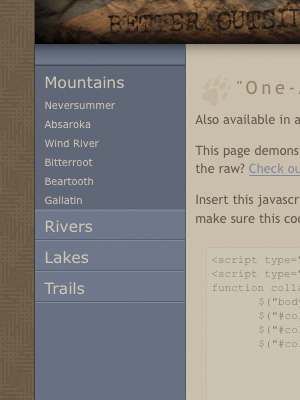

In the trunk folder is the file structure of the project itself. Here’s the trunk for one of my CodeIgniter projects:

While it’s generally bad practice to keep multiple projects in the same repository in Subversion, near as I can tell it’s truly a recipe for disaster in Git. Git is real smart about a lot of things, including tagging and branching and fundamentally offering a distributed version control system (read: a local copy of your entire revision history), but that smartness will make your brain ache if you try to independently maintain multiple projects in the same repository on your local machine.

And so it came to pass that I wanted to convert my single Subversion repository into eight separate Git repositories, one for each of the projects I had been tracking. There are many wonderful tutorials available for handling the generic conversion of a Subversion repo to Git, but none that outlined how to manage this split.

I hope to shed some light on this process. These instructions are heavily influenced by Paul Dowman’s excellent post on the same subject, with the extra twist of splitting a single Subversion repository into multiple Git repositories. I would highly recommend you read through his instructions as well.

First things first: Install and configure Git.

First, I installed Git. I’m on OS X, and while I’m sure you can do this on Windows, I haven’t the foggiest how you would go about it.

After installing Git I had to do some initial global configuration, setting up my name and address and such. There are other tutorials that tell you how to do that, but ultimately it’s two commands in Terminal:

[prompt]$ git config --global user.name "Your Name"

[prompt]$ git config --global user.email [email protected]

Also, I needed to set up SSH keys between my local machine and a remote server, as the ultimate goal of this undertaking was to push my Git repositories to the cloud. I have an account at Beanstalk that allows me to host multiple repositories, and they have a great tutorial on setting up SSH keys in their environment. GitHub has a helpful tutorial on SSH keys as well.

Give yourself some space.

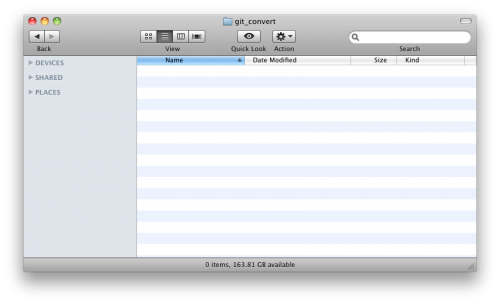

Next, I created a folder where I was going to do my business. I called it git_convert:

Then, I created a file in git_convert called authors.txt, which maps each user from my Subversion repository onto a full name and email address for my forthcoming Git repositories. My authors.txt file is super basic, as I’m the only dude who’s been rooting around in this repository. All it contains is this single line of text:

dane = Dane Petersen <[email protected]>

Now crank that Soulja Boy!
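One caution before cloning: git svn stops with an error if it meets a Subversion username that is missing from authors.txt. With a single committer that is easy to eyeball, but for a busier repository a quick check can help. What follows is only a sketch and not part of my original workflow. The function names svn_authors and missing_authors are made up for illustration, and both expect the output of svn log -q on standard input.

```shell
# Hypothetical helpers (names are illustrative, not part of git or svn).

# Read `svn log -q` output on stdin and print each committer name once.
# Revision lines look like: r12 | dane | 2010-01-01 10:00:00 -0600 (...)
svn_authors() {
  awk -F'|' '/^r[0-9]+ / { gsub(/^ +| +$/, "", $2); print $2 }' | sort -u
}

# Print every committer from stdin that has no "name = ..." line in the
# authors file given as $1. The match is a simple pattern match with grep,
# so unusual characters in usernames may need extra care.
missing_authors() {
  authors_file="$1"
  svn_authors | while IFS= read -r user; do
    grep -q -- "^$user *=" "$authors_file" || printf '%s\n' "$user"
  done
}

# Usage (assumes REPO_URL holds the Subversion repository URL):
#   svn log -q "$REPO_URL" | missing_authors authors.txt
```

If the usage line prints nothing, every committer in the log already has an entry in authors.txt.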

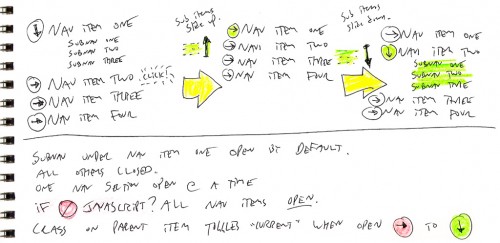

Now comes the good stuff. The git svn command will grab a remote Subversion repository, and convert it to a Git repository in a specified folder on my local machine. Paul Dowman’s tutorial is super handy, but it took some experimentation before I discovered that git svn works not only for an entire repository, but for its subfolders as well. All I needed to do was append the path for the corresponding project to the URL for the repository itself.

What’s awesome, too, is that if you convert a subfolder of your Subversion repository to Git, git svn will leave all the other cruft behind, and will convert only the files and commits that are relevant for that particular folder. So, if you have a 100 MB repository that you’re converting to eight Git repositories, you’re not going to end up with 800 MB worth of redundant garbage. Sick, bro!
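To make the URL pattern concrete: the project’s folder name gets appended to the repository URL, and the same name can label the output folder. Here is a minimal sketch of that idea, reusing the flags from the clone command I ran for this post. The helper name convert_project and the DRY_RUN switch are my own inventions for illustration, and by default the function only prints the command rather than running it.

```shell
# Hypothetical helper: build the git svn clone command for one project folder.
#   $1: base URL of the Subversion repository
#   $2: name of the project folder inside that repository
# DRY_RUN defaults to 1, which prints the command instead of executing it.
convert_project() {
  svn_base="$1"
  project="$2"
  if [ "${DRY_RUN:-1}" = "1" ]; then
    run=echo
  else
    run=
  fi
  # With $run empty, the line below runs git directly.
  $run git svn clone "$svn_base/$project" --no-metadata -A authors.txt \
    -t tags -b branches -T trunk "git_$project"
}

# Usage: print the command for the brainsideout project folder.
#   convert_project "http://brainsideout.svn.beanstalkapp.com/brainsideout" brainsideout
# Run it for real with:
#   DRY_RUN=0 convert_project "http://brainsideout.svn.beanstalkapp.com/brainsideout" brainsideout
```

Calling it once per project folder, from inside git_convert, would give one local Git repository per project. Only the brainsideout folder name appears here; substitute whatever your own project folders are called.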

After firing up Terminal and navigating to my git_convert directory, I used the following command to clone a subfolder of my remote Subversion repository into a new local Git repository:

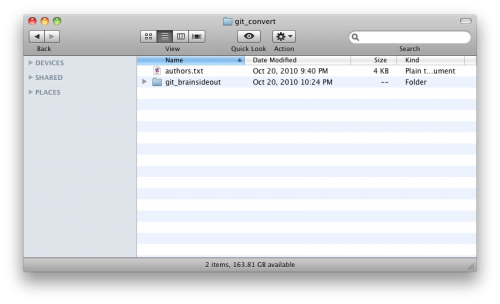

[prompt]$ git svn clone http://brainsideout.svn.beanstalkapp.com/brainsideout/brainsideout --no-metadata -A authors.txt -t tags -b branches -T trunk git_brainsideout

After some churning, that created a new folder called ‘git_brainsideout’ in my git_convert folder:

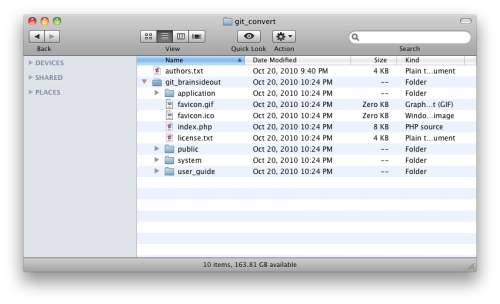

That folder’s contents are an exact copy of the corresponding project’s trunk folder of my remote Subversion repository:

You’ll notice that the trunk, tags and branches folders have all disappeared. That’s because my git svn command mapped them to their appropriate places within Git, and also because Git is awesomely smart in how it handles tags and branches. Dowman has some additional commands you may want to consider for cleaning up after your tags and branches, but this is all it took for me to get up and running.
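For the curious, here is the general shape of that kind of tag cleanup. git svn imports Subversion tags as remote-tracking refs rather than real Git tags, so one option is to turn each of those refs into a proper tag and then delete the ref. This is a sketch under assumptions, not Dowman’s exact commands: the function name svn_tags_to_git_tags is made up, and the ref prefix depends on your Git version (refs/remotes/tags in older releases, refs/remotes/origin/tags in newer ones). Running git for-each-ref shows which one you have.

```shell
# Hypothetical helper: convert the refs git svn created for Subversion tags
# into ordinary lightweight Git tags, then remove the leftover refs.
#   $1: ref prefix to convert (assumption: defaults to refs/remotes/tags)
svn_tags_to_git_tags() {
  prefix="${1:-refs/remotes/tags}"
  git for-each-ref --format='%(refname)' "$prefix" |
  while IFS= read -r ref; do
    tag="${ref#"$prefix"/}"   # strip the prefix, keep the tag name
    git tag "$tag" "$ref" && git update-ref -d "$ref"
  done
}

# Usage, from inside a converted repository:
#   svn_tags_to_git_tags refs/remotes/tags
```

Afterward, git tag should list the old Subversion tags by name.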

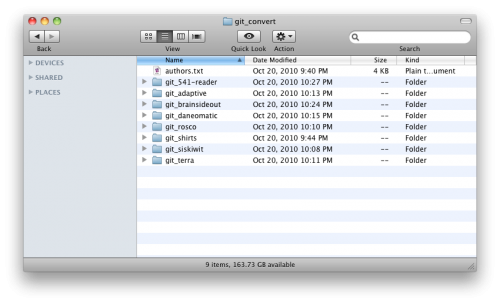

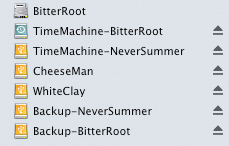

Using git svn in the above manner, I eventually converted all my Subversion projects into separate local Git repositories:

Again, the trunk, tags and branches folders are gone, mapped and replaced by the invisibly magic files of Git:

Push your efforts into the cloud.

I had a few empty remote Git repositories at Beanstalk where I wanted all my hard work to live, so my last step was pushing my local repositories up to my Git server. First, I navigated into the desired local Git repository, and set up a name for the remote repository using the remote command:

[prompt]$ git remote add beanstalk [email protected]:/brainsideout.git

I had previously set up my SSH keys, so it was easy to push my local repository to the remote location:

[prompt]$ git push beanstalk master

Bam. Dead. Done. So long, Subversion!
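One footnote on that push: it sends only the master branch. If you have created other local branches or Git tags along the way, they stay behind unless you push them too. A minimal sketch, assuming a remote that has already been added; the function name push_everything is made up for illustration.

```shell
# Hypothetical helper: push every local branch and every tag to one remote.
#   $1: name of an already-configured remote, e.g. beanstalk
push_everything() {
  remote="$1"
  git push "$remote" --all    # all local branches
  git push "$remote" --tags   # all tags
}

# Usage, from inside a converted repository:
#   push_everything beanstalk
```

Remote-tracking refs left over from git svn are not local branches, so they are not included by --all.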

For more information on how to get rollin’ with Git, check out the official git/svn crash course, or Git for the lazy.

Happy Gitting!